Creating 3D worlds for games, virtual reality (VR), augmented reality (AR), and the metaverse can be time-consuming, laborious, and expensive. However, AI tools can accelerate and simplify the process, allowing game developers, designers, and creators to focus on creativity, storytelling, and gameplay. In this article, we introduce and review several AI tools that can be used to create 3D worlds and objects. We will discuss Point-E, Sloyd, Pix2Pix3D, Luma AI, Blender, and LatentLabs, and how you can use them to create your own 3D world.

Point-E: create 3D objects based on a prompt

Point-E is a tool developed by OpenAI, the company behind DALL-E 2. It generates point clouds, discrete sets of data points that represent a 3D shape, from a text prompt. The tool works in two stages: a text-to-image model first converts the prompt into an image, and a second model then turns that image into a 3D point cloud. Point-E is still in its early stages, and it will likely be a while before it produces accurate 3D renders. However, the code is available on GitHub for the more technically minded, and you can test the technology through Hugging Face. For more information, see our full article about Point-E.

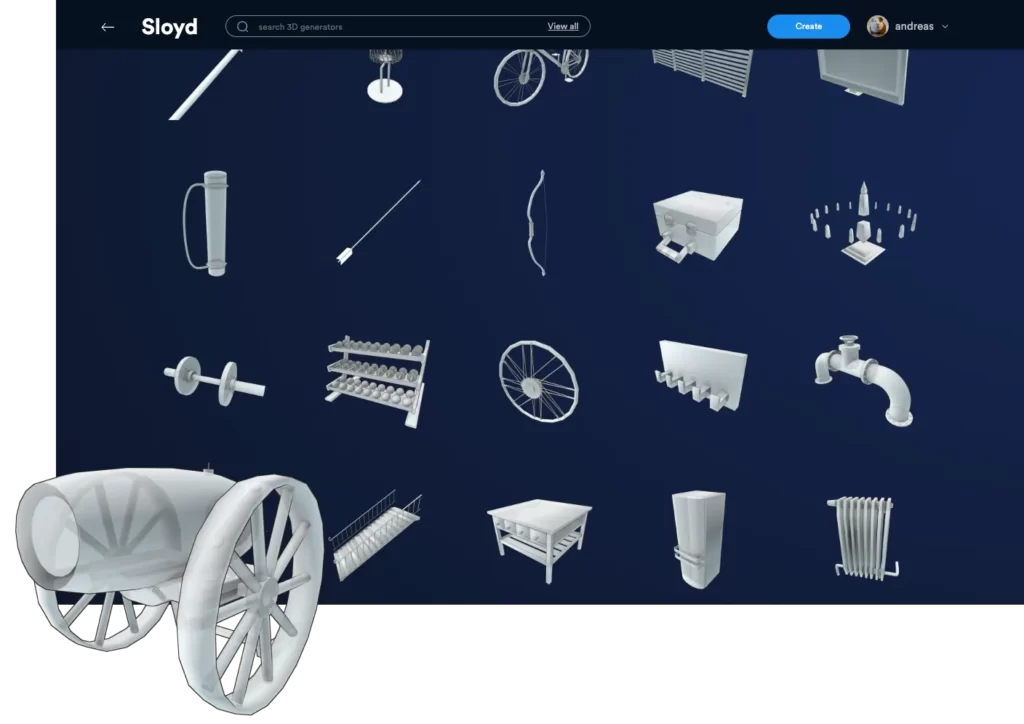
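
To make the idea of a point cloud concrete, here is a minimal Python sketch. It does not call Point-E: the function names `sphere_point_cloud` and `to_ascii_ply` are hypothetical, and a simple sphere stands in for a generated shape. Each point is an (x, y, z) position plus an RGB color, and the result is serialized as ASCII PLY, a common plain-text format for point clouds.

```python
import math


def sphere_point_cloud(n_points, radius=1.0):
    """Return n_points (x, y, z, r, g, b) tuples spread roughly evenly over a sphere."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))  # Fibonacci-spiral spacing
    points = []
    for i in range(n_points):
        z = 1.0 - 2.0 * (i + 0.5) / n_points  # heights from near +1 to near -1
        ring = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at height z
        theta = golden_angle * i
        x, y = ring * math.cos(theta), ring * math.sin(theta)
        shade = int(255 * (z + 1.0) / 2.0)  # color each point by its height
        points.append((radius * x, radius * y, radius * z, shade, 64, 255 - shade))
    return points


def to_ascii_ply(points):
    """Serialize colored points as an ASCII PLY document."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    body = [f"{x:.4f} {y:.4f} {z:.4f} {r} {g} {b}" for x, y, z, r, g, b in points]
    return "\n".join(header + body) + "\n"


if __name__ == "__main__":
    cloud = sphere_point_cloud(500)
    # Show the header and the first two points of the PLY text.
    print("\n".join(to_ascii_ply(cloud).splitlines()[:12]))
```

Writing the returned string to a file with a `.ply` extension gives a point cloud that 3D tools with PLY import can open. Note that there are no faces here, only points, which is why point clouds usually need a further meshing step before they can be used as game assets.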

Sloyd: create 3D objects based on a prompt and modify the properties on a whim

Sloyd.ai is a 3D creation tool that uses AI to generate customizable 3D assets quickly and easily based on a text prompt. The shapes are still pretty basic: the objects look a bit like Lego blocks or IKEA furniture. However, there are many configuration options to influence the properties of the object.

Pix2Pix3D: create a 3D model from a 2D sketch

Pix2Pix3D was developed by a team of researchers at Carnegie Mellon University. The model takes a 2D sketch of an object, such as an edge map, as input and generates a 3D model of the object. This makes it possible to create 3D models quickly from sketches, which can be useful in fields such as architecture, product design, and game development. The source code can be found on GitHub.

Figure panels: Input Edge Map, Generated Images, Generated Edge Map, Mesh.

Luma AI: create 3D models based on a few photos

Luma AI is a company that develops neural rendering technology to generate, shade, and render photorealistic 3D models of products. It offers an app that lets users capture lifelike 3D images with an iPhone. The app uses NeRF (neural radiance field) models to generate a 3D scene from a sparse collection of 2D images of that scene. The company is also active in neural rendering research and has developed full volumetric photorealistic NeRF rendering on the web. One use of the technology is showcasing travel landmarks in 3D.

NeRF, or neural radiance field, is a fully connected neural network that can generate novel views of complex 3D scenes from a partial set of 2D images. It does this by optimizing an underlying continuous volumetric scene function using a sparse set of input views. NeRF technology can accelerate the growth of the metaverse by providing more realistic objects and environments for VR and AR devices, since it can recreate real places in 3D from just a few photographs. See our full article about NeRF.
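
The rendering step behind this can be sketched in a few lines of Python. In NeRF, the network predicts a density and a color for sample points along each camera ray, and those samples are blended front to back into a single pixel color. The sketch below shows only that blending step, with hand-picked values in place of a trained network; `composite_ray` and its parameter names are illustrative and not part of any library.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend samples along one ray, front to back.

    densities: volume density at each sample (0 means empty space)
    colors:    (r, g, b) at each sample, each channel in [0, 1]
    deltas:    distance between consecutive samples
    Returns the pixel color and the light that passed through everything.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha  # contribution of this sample
        for channel in range(3):
            pixel[channel] += weight * color[channel]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


if __name__ == "__main__":
    # Assumed toy ray: empty space, thin blue haze, then a dense red surface.
    densities = [0.0, 0.5, 1000.0]
    colors = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
    deltas = [0.1, 0.1, 0.1]
    print(composite_ray(densities, colors, deltas))
```

Training a NeRF amounts to adjusting the network so that pixels composited this way match the input photographs. Once they do, casting rays from a new camera position yields a novel view.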

Blender

Blender is a free and open-source 3D creation software that can be used to create 3D models, animations, simulations, and games. Blender can also be used in combination with AI tools, such as ChatGPT and Stable Diffusion.

Blender: AI-powered 3D texturing

One recent addition to the Blender ecosystem is an AI-powered texture generator that can create realistic textures for 3D objects. Carson Katri created Dream Textures, a very impressive Blender add-on that can add textures to 3D models. The add-on is also great for generating Stable Diffusion images for inspiration directly inside Blender!

The add-on uses a machine learning model to analyze a 2D image and generate a seamless texture that can be applied to a 3D model. The model picks up the color, shape, and texture of the image and uses that information to create a new texture that matches the style of the original image.

This feature can save a significant amount of time for game developers and artists who need to create many different textures for their 3D models. Instead of manually creating textures from scratch, they can use the AI-powered texture generator in Blender to quickly create high-quality textures that match their artistic vision.

Blender: create Python scripts using ChatGPT

ChatGPT can help users generate scripts for Blender, making it easier to automate certain tasks and streamline the workflow.

One way ChatGPT can assist with Blender scripting is by generating Python code. Blender uses Python as its scripting language, so ChatGPT can generate Python scripts that perform specific tasks within Blender. For example, ChatGPT can be used to generate a script that automates the rendering of a complex animation sequence, saving users time and effort.
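
As a hedged illustration, a script of the kind ChatGPT might produce for this task could look like the sketch below. It is not actual ChatGPT output: the helper names `frame_chunks` and `render_in_chunks`, the chunk size, and the output path are assumptions made for the example. It renders a long animation in smaller frame ranges, so an interrupted render can be resumed from the last finished chunk. The `bpy` module only exists inside Blender, so outside Blender the script just reports the planned chunks.

```python
try:
    import bpy  # only available in Blender's bundled Python
except ImportError:
    bpy = None


def frame_chunks(start, end, chunk_size):
    """Split an inclusive frame range into (first, last) chunks."""
    chunks = []
    first = start
    while first <= end:
        last = min(first + chunk_size - 1, end)
        chunks.append((first, last))
        first = last + 1
    return chunks


def render_in_chunks(start, end, chunk_size, output_dir="//render/"):
    """Render the animation chunk by chunk and return the chunk list."""
    chunks = frame_chunks(start, end, chunk_size)
    if bpy is None:
        return chunks  # outside Blender: only report the plan
    scene = bpy.context.scene
    original = (scene.frame_start, scene.frame_end, scene.render.filepath)
    for first, last in chunks:
        scene.frame_start = first
        scene.frame_end = last
        # "//" makes the path relative to the .blend file (assumed layout).
        scene.render.filepath = f"{output_dir}frames_{first:04d}_{last:04d}_"
        bpy.ops.render.render(animation=True)
    # Restore the scene settings changed above.
    scene.frame_start, scene.frame_end, scene.render.filepath = original
    return chunks


if __name__ == "__main__":
    print(render_in_chunks(1, 250, 100))
```

Run from Blender's Scripting workspace, this renders frames 1 to 250 in three passes. Generated scripts like this should always be read and tested on a small frame range first, since ChatGPT can produce calls that do not match the installed Blender version.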

Additionally, ChatGPT can be used to generate Blender-specific scripts that create or modify objects in a scene.
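
As one such idea, the sketch below arranges cubes in a ring, the sort of small object script you might ask ChatGPT to write. The function names `ring_positions` and `add_cube_ring`, the default sizes, and the object names are hypothetical. As before, `bpy` is only available inside Blender, so the script falls back to returning the computed positions when run elsewhere.

```python
import math

try:
    import bpy  # only available in Blender's bundled Python
except ImportError:
    bpy = None


def ring_positions(count, radius):
    """Return count (x, y, z) positions evenly spaced on a circle in the XY plane."""
    positions = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        positions.append((radius * math.cos(angle), radius * math.sin(angle), 0.0))
    return positions


def add_cube_ring(count=8, radius=4.0, cube_size=1.0):
    """Add one cube per ring position when running inside Blender."""
    positions = ring_positions(count, radius)
    if bpy is not None:
        for index, location in enumerate(positions):
            bpy.ops.mesh.primitive_cube_add(size=cube_size, location=location)
            # The newly added cube becomes the active object; give it a readable name.
            bpy.context.active_object.name = f"RingCube_{index:02d}"
    return positions


if __name__ == "__main__":
    for position in add_cube_ring():
        print(position)
```

Keeping the geometry calculation in its own function makes it easy to check the layout without opening Blender, and to swap the cube for another primitive later.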

LatentLabs: create a 3D world from a prompt

LatentLabs is an AI-powered tool that generates realistic 3D environments from simple text prompts, so developers can create immersive environments without spending hours designing every detail manually.

Conclusion

In conclusion, AI technology has made significant advancements in the field of 3D modeling and has opened up new possibilities for game developers, artists, and designers. Tools like Point-E, Sloyd, Luma AI, Mirage, LatentLabs, and Blender are just a few examples of how AI can be used to create realistic 3D environments quickly and easily.

With AI-powered 3D modeling tools, developers can create high-quality 3D models and environments in less time than it would take to create them manually. This can save significant amounts of time and effort and can allow developers to focus on creating immersive and engaging experiences for their users.

As AI technology continues to advance, we can expect to see even more powerful and sophisticated 3D modeling tools in the future. These tools will make it easier than ever for developers and artists to create realistic and immersive environments for games, VR, AR, and the metaverse.