Artificial intelligence has been transforming industries for some time, and with the recent release of OpenAI’s Point-E, designers can now generate rough 3D models from a text prompt quickly. Point-E is the newest addition to OpenAI’s toolset, and it uses AI to turn text prompts into 3D models. In this article, we explore Point-E’s capabilities and limitations and discuss how the tool could transform the 3D design industry.

OpenAI's Progress with AI

OpenAI has been one of the most significant contributors to the field of artificial intelligence. Two of its most recent projects, Dall-E 2 and ChatGPT, have been game-changers: Dall-E 2 generates images from text descriptions, while ChatGPT generates long passages of text in response to a prompt. Point-E, OpenAI’s latest release, arrived just before Christmas 2022, and it is already causing a stir online.

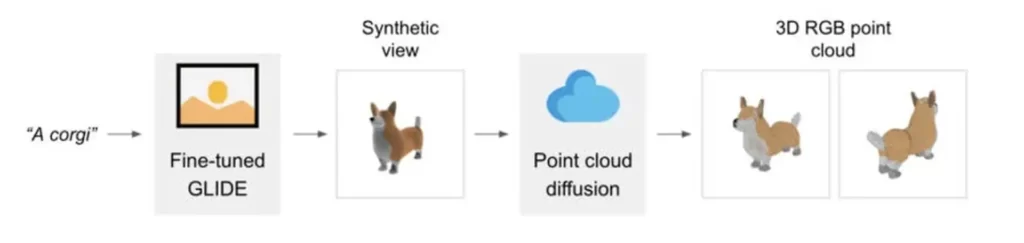

What is Point-E, and how does it work?

Point-E follows in the footsteps of Dall-E 2, but instead of images it generates 3D models. It works in two steps. First, a text-to-image model creates an image from your prompt. Then, a second model turns that image into a 3D shape. Unlike a traditional 3D model, this shape is not a continuous, solid surface. Instead, Point-E produces a point cloud: a set of points scattered through space that together outline a 3D shape. A separate model can then convert the points into a mesh, which better captures the surfaces and edges of the object. The result is a rough 3D representation of your prompt, which can be imported into software such as Blender and refined into a more realistic object.
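To make the idea of a point cloud more concrete, here is a minimal Python sketch. It is not Point-E’s own code. It represents a shape as a list of (x, y, z) points with one RGB color per point, then writes it out in the ASCII PLY format, a common interchange format for point clouds and meshes. The Fibonacci-sphere sampler is a stand-in for a model’s output, and the helpers `sample_sphere_surface` and `to_ascii_ply` are hypothetical names written only for this example.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]


def sample_sphere_surface(n_points: int) -> List[Point]:
    """Spread n_points roughly evenly over the surface of a unit sphere.

    Hypothetical stand-in for a generated point cloud, using a
    Fibonacci (golden-angle) lattice.
    """
    golden_angle = math.pi * (3 - math.sqrt(5))
    points: List[Point] = []
    for i in range(n_points):
        z = 1 - 2 * (i + 0.5) / n_points  # height, stepping from near +1 down to near -1
        radius = math.sqrt(1 - z * z)     # radius of the horizontal circle at height z
        theta = golden_angle * i          # rotate each new point by the golden angle
        points.append((radius * math.cos(theta), radius * math.sin(theta), z))
    return points


def to_ascii_ply(points: List[Point], colors: List[Color]) -> str:
    """Serialize a colored point cloud as ASCII PLY (vertices only, no faces)."""
    if len(points) != len(colors):
        raise ValueError("need exactly one RGB color per point")
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    # One line per point: coordinates followed by its 0-255 RGB color.
    body = [
        f"{x:.6f} {y:.6f} {z:.6f} {r} {g} {b}"
        for (x, y, z), (r, g, b) in zip(points, colors)
    ]
    return "\n".join(header + body) + "\n"


if __name__ == "__main__":
    cloud = sample_sphere_surface(1024)
    red = [(200, 30, 30)] * len(cloud)
    # Print just the PLY header to show the file's structure.
    print("\n".join(to_ascii_ply(cloud, red).splitlines()[:10]))
```

Saving the returned string to a file such as `sphere.ply` should give something Blender’s PLY importer can open as a vertex-only object, although whether the per-point colors are displayed depends on the Blender version and viewport settings. With a real model, the coordinates and colors would come from its output instead of the sphere sampler.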

Limitations of Point-E

Point-E is still in its early stages and has clear limitations. As OpenAI notes in its research paper, generated objects can have missing points or come out blocky. The output is low in resolution and may not accurately represent the real-life object, so the model is not yet accurate enough to replace professional 3D designers. However, the technology is evolving quickly and is likely to become more accurate over time.

Training the Model

Point-E was trained on large datasets. The first stage, text-to-image, was trained on text prompts paired with images, much like Dall-E 2. The image-to-3D stage was trained in a similar way, on images paired with 3D models, so that Point-E could learn the relationship between the two; this training drew on millions of such examples. In early tests, Point-E produced rough, colored point clouds that matched the prompts, but these were still a long way from accurate representations.

How to Use Point-E

Point-E has not yet been officially launched by OpenAI, but its code is available on GitHub, so technically inclined users can run it on their own computer or in Google Colab. Alternatively, it can be tried through Hugging Face, a machine learning platform that also hosts demos of other major AI models. Keep in mind that the technology is in its early stages, so results may not be very accurate. And because many people are likely to be trying the Hugging Face demo, expect occasional long load times or queues. OpenAI has not said whether an official launch would be open to the public or invite-only at first.

Applications of Point-E

Point-E has the potential to transform the 3D design industry, even though it is still in its infancy and cannot yet rival the work of a professional.

In the world of design, one of the most important things is being able to create accurate and realistic models of the objects that we’re trying to design. This is true whether you’re designing a new car, a piece of furniture, or a building. And in recent years, the use of 3D modelling software has become increasingly popular for this purpose.

However, creating 3D models can be a time-consuming and difficult process that requires a lot of skill and expertise. That’s where Point-E comes in. With Point-E, designers can simply enter a text prompt and let the software generate a rough 3D model from it.

While the technology is still in its early stages, the potential applications of Point-E are easy to see. With this tool, designers could create 3D models more quickly and easily than ever before, which could change the way we design everything from buildings to products.

Of course, there are some concerns about the use of AI in design. Some people worry that tools like Point-E could replace human designers altogether. However, it’s important to remember that these tools are designed to work in tandem with human designers, rather than replace them. By taking on some of the more time-consuming aspects of the design process, tools like Point-E can free up designers to focus on the creative aspects of their work.

Overall, it’s clear that Point-E is a tool that designers need to be aware of. While it’s still in its early stages, the potential applications of this technology are vast, and it’s likely that we’ll be seeing more and more of it in the coming years. So if you’re a designer, it’s worth taking the time to learn about Point-E and the other AI tools that are changing the way we work.